Increasing RxODE speed by multi-subject parallel solving

RxODE originally developed as an ODE solver that allowed an ODE solve for a single subject. This flexibility is still supported.

The original code from the RxODE tutorial is below:

library(RxODE)

#> RxODE 1.1.1 using 1 threads (see ?getRxThreads)

#> no cache: create with `rxCreateCache()`

library(microbenchmark)

library(ggplot2)

mod1 <- RxODE({

C2 = centr/V2;

C3 = peri/V3;

d/dt(depot) = -KA*depot;

d/dt(centr) = KA*depot - CL*C2 - Q*C2 + Q*C3;

d/dt(peri) = Q*C2 - Q*C3;

d/dt(eff) = Kin - Kout*(1-C2/(EC50+C2))*eff;

eff(0) = 1

})

## Create an event table

ev <- et() %>%

et(amt=10000, addl=9,ii=12) %>%

et(time=120, amt=20000, addl=4, ii=24) %>%

et(0:240) ## Add Sampling

nsub <- 100 # 100 sub-problems

sigma <- matrix(c(0.09,0.08,0.08,0.25),2,2) # IIV covariance matrix

mv <- rxRmvn(n=nsub, rep(0,2), sigma) # Sample from covariance matrix

CL <- 7*exp(mv[,1])

V2 <- 40*exp(mv[,2])

params.all <- cbind(KA=0.3, CL=CL, V2=V2, Q=10, V3=300,

Kin=0.2, Kout=0.2, EC50=8)For Loop

The slowest way to code this is to use a for loop. In this example we will enclose it in a function to compare timing.

Running with apply

In general for R, the apply types of functions perform better than a for loop, so the tutorial also suggests this speed enhancement

runSapply <- function(){

res <- apply(params.all, 1, function(theta)

mod1$run(theta, ev, cacheEvent=FALSE)[, "eff"])

}Run using a single-threaded solve

You can also have RxODE solve all the subject simultaneously without collecting the results in R, using a single threaded solve.

The data output is slightly different here, but still gives the same information:

Run a 2 threaded solve

RxODE supports multi-threaded solves, so another option is to have 2 threads (called cores in the solve options, you can see the options in rxControl() or rxSolve()).

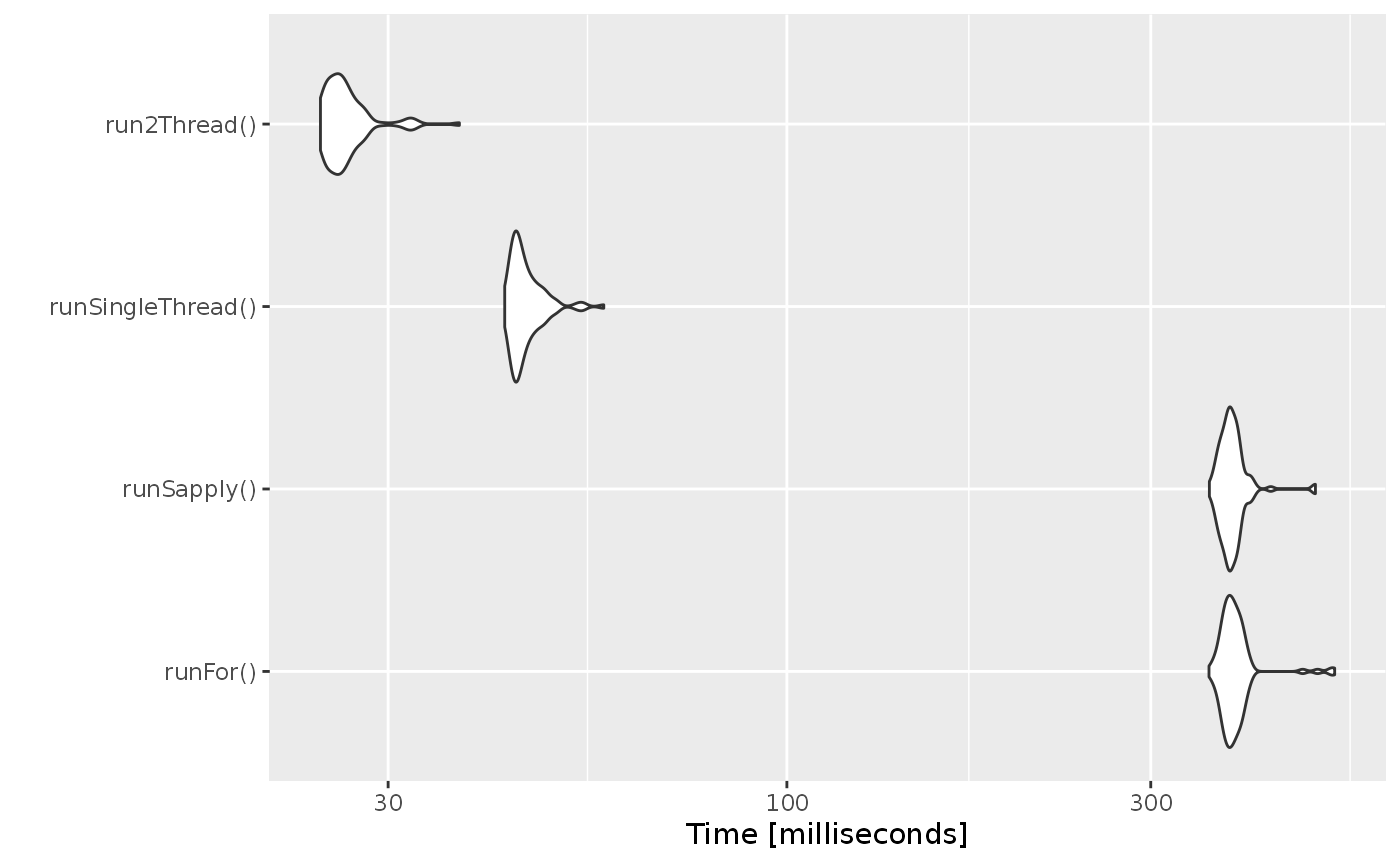

Compare the times between all the methods

Now the moment of truth, the timings:

bench <- microbenchmark(runFor(), runSapply(), runSingleThread(),run2Thread())

print(bench)

#> Unit: milliseconds

#> expr min lq mean median uq max

#> runFor() 357.6757 375.90410 387.9123 382.8639 392.28010 522.7531

#> runSapply() 358.0896 373.92735 384.2958 381.6122 388.96140 493.2533

#> runSingleThread() 42.6584 43.97855 45.5308 44.7217 46.38570 57.5327

#> run2Thread() 24.4560 25.12590 26.4667 25.9961 26.94145 37.2027

#> neval

#> 100

#> 100

#> 100

#> 100

autoplot(bench)

#> Coordinate system already present. Adding new coordinate system, which will replace the existing one.

It is clear that the largest jump in performance when using the solve method and providing all the parameters to RxODE to solve without looping over each subject with either a for or a sapply. The number of cores/threads applied to the solve also plays a role in the solving.

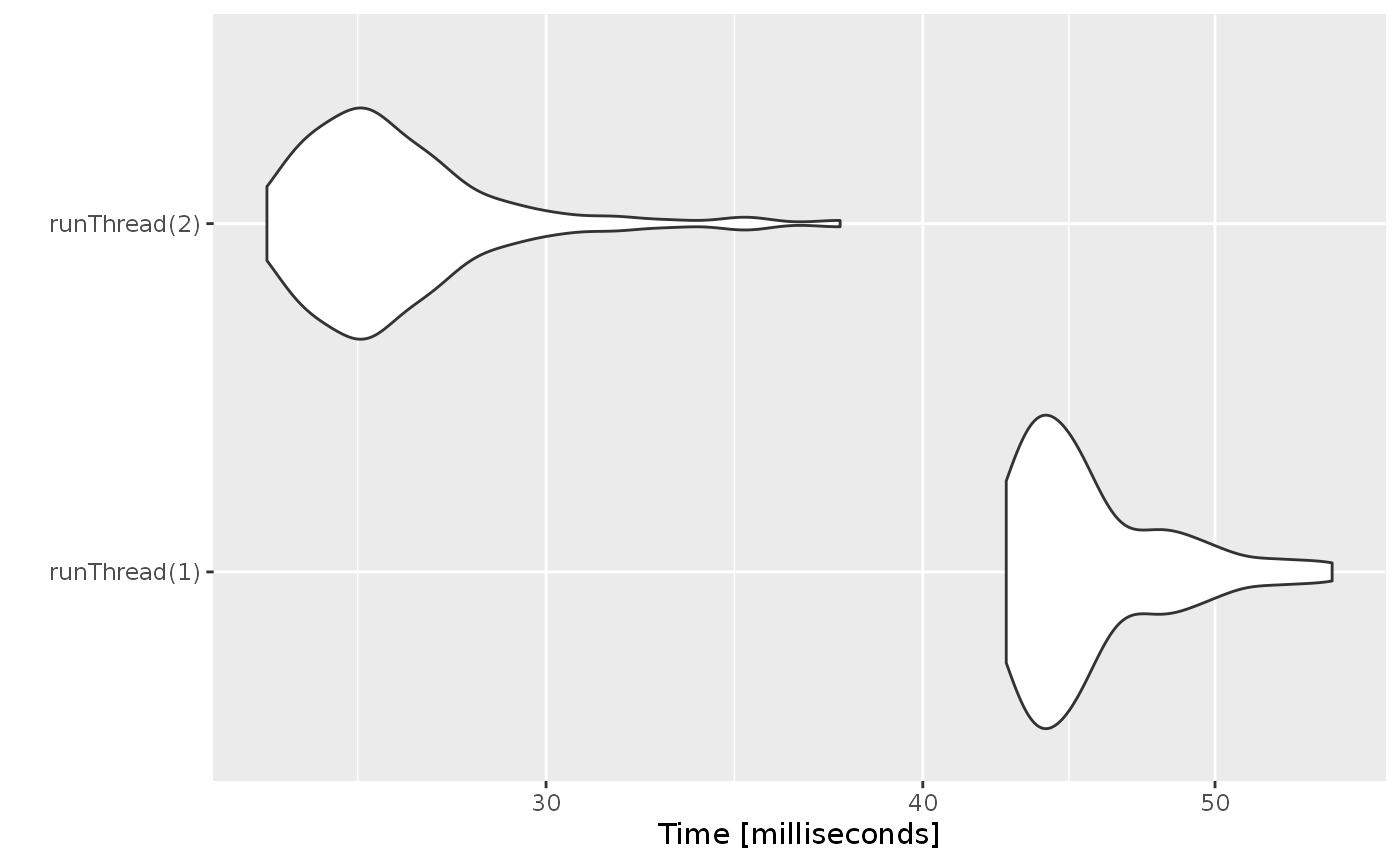

We can explore the number of threads further with the following code:

runThread <- function(n){

solve(mod1, params.all, ev, cores=n, cacheEvent=FALSE)[,c("sim.id", "time", "eff")]

}

bench <- eval(parse(text=sprintf("microbenchmark(%s)",

paste(paste0("runThread(", seq(1, 2 * rxCores()),")"),

collapse=","))))

print(bench)

#> Unit: milliseconds

#> expr min lq mean median uq max neval

#> runThread(1) 42.6319 43.63525 45.53822 44.69030 47.03000 54.6774 100

#> runThread(2) 24.2415 25.42905 26.85204 26.29105 27.44465 37.5535 100

autoplot(bench)

#> Coordinate system already present. Adding new coordinate system, which will replace the existing one.

There can be a suite spot in speed vs number or cores. The system type (mac, linux, windows and/or processor), complexity of the ODE solving and the number of subjects may affect this arbitrary number of threads. 4 threads is a good number to use without any prior knowledge because most systems these days have at least 4 threads (or 2 processors with 4 threads).

A real life example

Before some of the parallel solving was implemented, the fastest way to run RxODE was with lapply. This is how Rik Schoemaker created the data-set for nlmixr comparisons, but reduced to run faster automatic building of the pkgdown website.

library(RxODE)

library(data.table)

#Define the RxODE model

ode1 <- "

d/dt(abs) = -KA*abs;

d/dt(centr) = KA*abs-(CL/V)*centr;

C2=centr/V;

"

#Create the RxODE simulation object

mod1 <- RxODE(model = ode1)

#Population parameter values on log-scale

paramsl <- c(CL = log(4),

V = log(70),

KA = log(1))

#make 10,000 subjects to sample from:

nsubg <- 300 # subjects per dose

doses <- c(10, 30, 60, 120)

nsub <- nsubg * length(doses)

#IIV of 30% for each parameter

omega <- diag(c(0.09, 0.09, 0.09))# IIV covariance matrix

sigma <- 0.2

#Sample from the multivariate normal

set.seed(98176247)

rxSetSeed(98176247)

library(MASS)

mv <-

mvrnorm(nsub, rep(0, dim(omega)[1]), omega) # Sample from covariance matrix

#Combine population parameters with IIV

params.all <-

data.table(

"ID" = seq(1:nsub),

"CL" = exp(paramsl['CL'] + mv[, 1]),

"V" = exp(paramsl['V'] + mv[, 2]),

"KA" = exp(paramsl['KA'] + mv[, 3])

)

#set the doses (looping through the 4 doses)

params.all[, AMT := rep(100 * doses,nsubg)]

Startlapply <- Sys.time()

#Run the simulations using lapply for speed

s = lapply(1:nsub, function(i) {

#selects the parameters associated with the subject to be simulated

params <- params.all[i]

#creates an eventTable with 7 doses every 24 hours

ev <- eventTable()

ev$add.dosing(

dose = params$AMT,

nbr.doses = 1,

dosing.to = 1,

rate = NULL,

start.time = 0

)

#generates 4 random samples in a 24 hour period

ev$add.sampling(c(0, sort(round(sample(runif(600, 0, 1440), 4) / 60, 2))))

#runs the RxODE simulation

x <- as.data.table(mod1$run(params, ev))

#merges the parameters and ID number to the simulation output

x[, names(params) := params]

})

#runs the entire sequence of 100 subjects and binds the results to the object res

res = as.data.table(do.call("rbind", s))

Stoplapply <- Sys.time()

print(Stoplapply - Startlapply)

#> Time difference of 10.49439 secsBy applying some of the new parallel solving concepts you can simply run the same simulation both with less code and faster:

rx <- RxODE({

CL = log(4)

V = log(70)

KA = log(1)

CL = exp(CL + eta.CL)

V = exp(V + eta.V)

KA = exp(KA + eta.KA)

d/dt(abs) = -KA*abs;

d/dt(centr) = KA*abs-(CL/V)*centr;

C2=centr/V;

})

omega <- lotri(eta.CL ~ 0.09,

eta.V ~ 0.09,

eta.KA ~ 0.09)

doses <- c(10, 30, 60, 120)

startParallel <- Sys.time()

ev <- do.call("rbind",

lapply(seq_along(doses), function(i){

et() %>%

et(amt=doses[i]) %>% # Add single dose

et(0) %>% # Add 0 observation

## Generate 4 samples in 24 hour period

et(lapply(1:4, function(...){c(0, 24)})) %>%

et(id=seq(1, nsubg) + (i - 1) * nsubg) %>%

## Convert to data frame to skip sorting the data

## When binding the data together

as.data.frame

}))

## To better compare, use the same output, that is data.table

res <- rxSolve(rx, ev, omega=omega, returnType="data.table")

endParallel <- Sys.time()

print(endParallel - startParallel)

#> Time difference of 0.165817 secsYou can see a striking time difference between the two methods; A few things to keep in mind:

RxODEuse the thread-safe sitmothreefryroutines for simulation ofetavalues. Therefore the results are expected to be different (also the random samples are taken in a different order which would be different)This prior simulation was run in R 3.5, which has a different random number generator so the results in this simulation will be different from the actual nlmixr comparison when using the slower simulation.

This speed comparison used

data.table.RxODEusesdata.tableinternally (when available) try to speed up sorting, so this would be different than installations wheredata.tableis not installed. You can force RxODE to useorder()when sorting by usingforderForceBase(TRUE). In this case there is little difference between the two, though in other examplesdata.table’s presence leads to a speed increase (and less likely it could lead to a slowdown).

Want more ways to run multi-subject simulations

The version since the tutorial has even more ways to run multi-subject simulations, including adding variability in sampling and dosing times with et() (see RxODE events for more information), ability to supply both an omega and sigma matrix as well as adding as a thetaMat to R to simulate with uncertainty in the omega, sigma and theta matrices; see RxODE simulation vignette.

Session Information

The session information:

sessionInfo()

#> R version 4.1.1 (2021-08-10)

#> Platform: x86_64-pc-linux-gnu (64-bit)

#> Running under: Ubuntu 16.04.7 LTS

#>

#> Matrix products: default

#> BLAS: /usr/lib/openblas-base/libblas.so.3

#> LAPACK: /usr/lib/libopenblasp-r0.2.18.so

#>

#> locale:

#> [1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C

#> [3] LC_TIME=en_US.UTF-8 LC_COLLATE=en_US.UTF-8

#> [5] LC_MONETARY=en_US.UTF-8 LC_MESSAGES=en_US.UTF-8

#> [7] LC_PAPER=en_US.UTF-8 LC_NAME=C

#> [9] LC_ADDRESS=C LC_TELEPHONE=C

#> [11] LC_MEASUREMENT=en_US.UTF-8 LC_IDENTIFICATION=C

#>

#> attached base packages:

#> [1] stats graphics grDevices utils datasets methods base

#>

#> other attached packages:

#> [1] MASS_7.3-54 data.table_1.14.0 ggplot2_3.3.5

#> [4] microbenchmark_1.4-7 RxODE_1.1.1

#>

#> loaded via a namespace (and not attached):

#> [1] tidyselect_1.1.1 xfun_0.25 bslib_0.2.5.1

#> [4] purrr_0.3.4 colorspace_2.0-2 vctrs_0.3.8

#> [7] generics_0.1.0 htmltools_0.5.2 yaml_2.2.1

#> [10] utf8_1.2.2 rlang_0.4.11 pkgdown_1.6.1.9001

#> [13] jquerylib_0.1.4 pillar_1.6.2 withr_2.4.2

#> [16] glue_1.4.2 lifecycle_1.0.0 stringr_1.4.0

#> [19] munsell_0.5.0 gtable_0.3.0 ragg_1.1.3

#> [22] stringfish_0.15.2 memoise_2.0.0 evaluate_0.14

#> [25] RApiSerialize_0.1.0 knitr_1.33 fastmap_1.1.0

#> [28] sys_3.4 fansi_0.5.0 highr_0.9

#> [31] Rcpp_1.0.7 scales_1.1.1 backports_1.2.1

#> [34] checkmate_2.0.0 cachem_1.0.6 desc_1.3.0

#> [37] RcppParallel_5.1.4 jsonlite_1.7.2 farver_2.1.0

#> [40] mime_0.11 systemfonts_1.0.2 fs_1.5.0

#> [43] textshaping_0.3.5 digest_0.6.27 stringi_1.7.4

#> [46] lotri_0.3.1 dplyr_1.0.7 qs_0.25.1

#> [49] grid_4.1.1 rprojroot_2.0.2 cli_3.0.1

#> [52] tools_4.1.1 dparser_1.3.1-4 magrittr_2.0.1

#> [55] sass_0.4.0 tibble_3.1.4 PreciseSums_0.4

#> [58] crayon_1.4.1 pkgconfig_2.0.3 ellipsis_0.3.2

#> [61] rmarkdown_2.10 R6_2.5.1 units_0.7-2

#> [64] compiler_4.1.1